Magic Glasses: From 2D to 3D

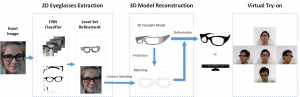

In this project, we design a virtual 3D eyeglasses try-on system driven by a 2D Internet image of a human face wearing a pair of eyeglasses. The main technical challenge of this system is the automatic reconstruction of a 3D eyeglasses model from the 2D glasses on a frontal human face. To address this challenge, we first propose an eyeglasses segmentation method that uses a Convolutional Neural Network (CNN) based parsing algorithm to label the glasses pixels, followed by a symmetry-based level-set optimization algorithm to refine the contour of the eyeglasses. With the precisely extracted silhouette image, we take advantage of the smoothness and symmetry priors of the eyeglasses and propose a silhouette-based 3D deformation method to deform a 3D eyeglasses model selected from a pre-defined 3D model database. After a texture mapping step, the resulting model plausibly approximates the input 2D eyeglasses. Finally, we develop a virtual try-on system that interactively synthesizes the reconstructed eyeglasses on a moving target face in real time.

Members: Xiaoyun Yuan, Difei Tang, Yebin Liu, Qing Ling

Illustration

An example of virtually trying on Lady Gaga’s glasses

The pipeline of the proposed system. The input image is cropped, and the eyeglasses contours are first extracted roughly by a pixel-level CNN classifier and then refined by a level-set step. Once the shape is obtained in the 2D extraction module, a template model is matched and deformed to reconstruct the final output model. The reconstructed model is then placed on the user’s face as captured by a Kinect.
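
The role of the symmetry prior in the 2D extraction module can be illustrated with a toy sketch. This is not the level-set refinement used in the system: it is a much simpler stand-in that assumes the cropped image is centred on the vertical symmetry axis of the face, and that a hypothetical pixel classifier has already produced a per-pixel glasses probability map. The function name `symmetrize_mask` and the `threshold` parameter are illustrative only.

```python
def symmetrize_mask(prob, threshold=0.5):
    """Fuse a glasses probability map with its left-right mirror image.

    prob: list of rows, each a list of floats in [0, 1] (hypothetical
          classifier output for a crop centred on the face).
    Returns a binary mask (list of rows of bools) that is symmetric
    about the vertical centre line of the crop.
    """
    mask = []
    for row in prob:
        width = len(row)
        # Average each pixel with its mirrored counterpart in the same row.
        fused = [0.5 * (row[c] + row[width - 1 - c]) for c in range(width)]
        # Label a pixel as glasses when the fused probability is high enough.
        mask.append([p >= threshold for p in fused])
    return mask


if __name__ == "__main__":
    # One image row where the right half of the frame was detected weakly.
    noisy = [[0.9, 0.2, 0.6, 0.3]]
    print(symmetrize_mask(noisy))
```

Averaging with the mirror image lets a confident detection on one side of the frame support a weak detection on the other side, which is the intuition behind exploiting symmetry when refining the contour.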

Video

Publication

Xiaoyun Yuan, Difei Tang, Yebin Liu, Qing Ling, and Lu Fang, “Magic Glasses: From 2D to 3D,” accepted by IEEE Transactions on Circuits and Systems for Video Technology (TCSVT), Special Issue on Augmented Video.